#(the following category tag was added retroactively:)


Bad news besties: Still not done even after sinking basically all weekend into trying and reasons why will become clear in a moment.

So for the curious, what I did end up doing over the weekend break was index all of the posts in bookmark form. This not only lets me separate the posts that need tag-fixing from the new ones I can ignore (since they already use the new tagging system), it also keeps me from having to go back and forth deepdiving the Archive to know which posts I did and didn't do yet, and it can help me count the posts that need fixing based on certain key categories.

-

In other words I got a neat stat chart counting how many posts were affected by the change and due for fixing, which counted up to this:

Note: The numbers might be skewed, due to various factors, including but not limited to: being bookmarked more than once cuz they fell under multiple criteria, being bookmarked more than once cuz I forgor, being technically counted repeatedly cuz the same post was reblogged multiple times in separate instances, and posts that were mistagged in some way and might've been added or pruned (typos, posts not relevant but picked up the name like tag-prompt asks, etc).

Commissions and Stores / Commissions / Stores (68)

Tech (103)

Sailor Moon (233)

MC Mobs (248)

OCs (1,130)

MCYT/MCYT Pets (1,491)

Fire Emblem (7,389) (Incidentally Fates and especially Heroes were the biggest chunk of the collective Fire Emblem posts)

Grand total: 10,662
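For anyone who wants to double-check the math, here's a quick sketch in Python that re-adds the category counts listed above. The dictionary layout and variable names are just my own illustration, and as the note says, the counts themselves may include duplicates, so this only confirms the addition, not the true number of unique posts.

```python
# Per-category bookmark counts, copied from the list above.
counts = {
    "Commissions and Stores / Commissions / Stores": 68,
    "Tech": 103,
    "Sailor Moon": 233,
    "MC Mobs": 248,
    "OCs": 1130,
    "MCYT/MCYT Pets": 1491,
    "Fire Emblem": 7389,
}

# Sum of all categories (duplicates across categories are NOT removed).
grand_total = sum(counts.values())

# Fraction of the total that falls under Fire Emblem.
fire_emblem_share = counts["Fire Emblem"] / grand_total

print(grand_total)
print(f"{fire_emblem_share:.1%}")
```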

-

As you can guess, through sheer post bulk alone this quickly went from an 'it'll be fine, I'll get this done in a few days as a quick art break' clean-up task to an 'I'll get this done eventually, but it'll be a long and gradual grandfathering process' thing. So whereas before I held back on mentioning what changes to expect, figuring I'd announce them once all the fixes were done, I'm instead just gonna go ahead and hash out the specific changes now, and the retroactive changes will happen gradually while I get other things done.

Note: As mentioned, this was originally written under the assumption I could post it AFTER the changes took effect, so it reads as if the changes are already retroactive. If they aren't true at time of typing, they will be eventually, and they're definitely true for posts made or reblogged after the change announcement.

-

The following changes are in effect moving forward, and gradually being retroactively applied:

Fire Emblem is now a tag that'll be added to every Fire-Emblem-related post in addition to splitting it by series. It will also be (and previously had been) applied to posts so generally Fire-Emblem-related that they didn't apply to any particular series (hence why this was a long overdue change).

For the curious, we currently have the following Fire Emblem tags:

-- Fire Emblem Mystery of the Emblem (Shadow Dragon is not tagged for and is folded into this)

-- Fire Emblem Echoes (Gaiden is not tagged for and folded into this)

-- Fire Emblem Genealogy of the Holy War / Fire Emblem Thracia 776 (Fire Emblem Genealogy has been retired, characters will be covered in both regardless of proper game appearance)

-- Fire Emblem Binding Blade (Also now shares Fire Emblem Blades to bridge with Blazing Blade)

-- Fire Emblem Blazing Blade (Also now shares Fire Emblem Blades to bridge with Binding Blade)

-- Fire Emblem Sacred Stones

-- Fire Emblem Path of Radiance / FEPoR / Fire Emblem Radiant Dawn / FERD (Characters will be covered in both regardless of proper game appearance. Also while FEPoR and FERD will be retired, they'll be replaced and condensed with Fire Emblem Radiance)

-- Fire Emblem Awakening

-- Fire Emblem If / Fire Emblem Fates

-- Fire Emblem Three Houses / FE3H (FETH is changed to the more popular FE3H while FETH will be retired. Since Shez and Arval are for the most part the only outliers, Three Hopes is not tagged for and is folded into this)

-- Fire Emblem Engage (Emblems have their own collective tag, Emblems, and emblem alts are now noted separately, but their home series and main tags will still be used too. ex: Marth and Emblem Marth count as this AND Mystery of the Emblem.) For non-canonical emblems, there's an additional tag of OG Emblems on top of tagging them as Emblems (ex: Emblem Bruno on top of OG Emblems and Emblems) to collectively find them easier.

-- Fire Emblem Heroes / FEH (Feh the owl is the tag to differentiate the owl of the same name from the game. Note too this will take priority for characters originating from Heroes/FEH, gameplay moments, the Everyday Life manga, or other things applying to the game. Characters will otherwise tag for their home series, even if their alts or outfits are Heroes-exclusive. ex: Bride Ninian is filed under Blazing, not Heroes)

-- Tokyo Mirage Sessions (note that Mirage alts are noted separately, but their home series and main tags will still be used too. ex: Virion and Mirage Virion counts as this AND Awakening.)

-- Fire Emblem 64 / Fire Emblem The Sword of Seal (separate from Binding/Blazing/Stone, it's that lost beta FE game)

-- Fire Emblem Warriors (The first game, see above about Three Hopes)

-- Fire Emblem Cipher (card art and characters exclusive to this thereof)

-- A Day in the Life of Heroes (the official FEH manga)

Sailor Moon has been overhauled. From now on Sailor Moon applies to the magical girl/senshi. Pretty Guardian Sailor Moon applies to the series as a whole. (Note that while Sailor Moon Crystal and Sailor Moon Saban are also tagged for, they do NOT override Pretty Guardian Sailor Moon. There'll also not be any split between manga and anime.)

'OP's OCs' is being retired in favor of OCs and Other's OCs. The reason for this is that I worried it'll cause confusion when applied as intended (generally any OC that's literally not mine) in the event of certain specific scenarios where it becomes a misnomer out-of-context for those who didn't follow the blog long enough to know the tag's actual usage (such as gift art and/or commissions where they were OCs related to the stated recipient on-post, but not, in fact, the OP's OCs). Hopefully this clarifies things. (Note that fandom-specific OC tags still apply as usual.) OCs will also include ANY OC, including my own, whereas previously my own OCs had their own dedicated tags (ex: Summoner OC Eclair). Adding to ease of use, my own OCs now have a general My OCs tag too.

In addition, certain fandom-specific OC tags were tweaked for better consistency, including Palworld (Pal Tamer OC, not to be confused with Pal OC which are OCs who are based on species of pals over humans), Digimon (Tamer OC, ditto, Digimon OC are OCs based on species of digimon over humans) and Animal Crossing (Villager OC for animals, Player OC for human-villagers)

Kiran now exclusively applies to default Kiran, canon Kiran, or instances the official Kiran is present or shown. Summoners who are clearly meant to be OCs who happened to be named 'Kiran' will remain exclusively in Summoner OC. There may be overlap in grey areas (indistinct summoners who are unnamed or named 'Kiran', whose OC status is unconfirmed or ambiguous, and who are indistinct from hooded-default-Kiran). Other (unhooded) Kirans based on the character select options will just plain be counted as Summoner OCs to avoid the confusion of trying to otherwise separate them as kiransonas. (Corrinsona, Robinsona, and any other -sona based on an existing player character still remain.)

Grima, who previously had two tags denoting Grima (generally anything related to Grima be it directly or adjacently) and Grima Robin (specifically fallen-Robin or Robin clearly possessed by or turning into Grima), now has Dragon Grima as a tag on top too (Grima's dragon form specifically). Similarly, Chrom has a new tag of Risen Chrom (fallen Chrom alt or adjacently-themed otherwise).

As with our previous poll results, Nil now has been changed to Rafal when relevant. (Nil posts that actually feature Nil remain untouched.) However, despite also being an example, The Player will NOT be changed, as Quiet would erroneously be mixed up with another Quiet (Metal Gear) and The Long Quiet has certain distinctions enough to make it stand out on its own (like The Princess and the Dragon or Happily Ever After).

Minecraftubers posts will now also include MCYT, the most popular tag usually assigned to minecraftuber-related things. Minecraftubers, however, will still remain alongside it.

In addition, MCYT Pets will also be added to minecraftuber pets to search for specifically, on top of Minecraftubers and MCYT. The pets of the minecrafters will still be posted alongside the tags of their owners as usual. (give or take may-as-well-be-related-like-second-owners such as cases like Gus, who is Philza's neighbor's cat, but will still be tagged with Philza anyways)

Minecraft mob tags were cleaned up so that minecraft mobs have their own tags to separate them from their real life counterparts now (ex: minecraft cat / minecraft wolf / minecraft bee / etc versus cats / wolves / bees / etc) Exceptions do apply however…

-- Jellie is still Jellie and has her own tag, so she isn't tagged for as 'minecraft cat' unless there were also other cats present, even if it's Minecraft-Jellie over real-life-Jellie. If minecraftubers also made in-game equivalents of their pets (ex: Pearl's Nugget, Jimmy's Norman, Grian's Maui, etc) they'll be tagged for them, not as 'minecraft cats/wolves/etc' as well.

-- If the context is clear enough, Pearl's wolf Tillie has her own distinct tag from "minecraft wolf".

-- Generally, if they have a named pet, and it's clear they're supposed to be that pet, it'll go with the pet's name tag rather than the minecraft mob tags.

-- If the context is clear it's Minecraft Story Mode related, Reuben will be tagged separately from "minecraft pig".

Tech now specifies either what tech specifically is shown (ex: gamecube, 3ds, etc) or a general idea (computers, phones, etc). Tech will still remain alongside them otherwise.

Posts where I dumped lore in tags via post prompt now have the tag Tag Lore added to them for easier finding. (Before, they ended up untagged and were easier to miss after the moment compared to Suddenly Lore, which documents posted lore.)

Similar to the reason OP's OCs was retired, Commissions is now Commissions and Stores to better clarify the tag's meaning. There's also a split between Commissions and Stores specifically now.

Hey! Just a heads up, but I'm doing some spring cleaning in the Fire Emblem tags. From now on, all Fire Emblem games will have 'Fire Emblem' in addition to their given home series, and while this had been a long time coming, I'm making moves to wrangle the tags so stray general-FE posts finally don't get lost in obscurity, and there's now a tag where you can view ALL Fire Emblem related posts regardless of series, rather than by series only.

With that being said, I'm making very recent shifts while doing this, and also working on gathering all affected posts to go through later, so what this means for all of you is you might see certain weirdness on what is and isn't counted in the tag right now between ancient posts of reblogs past getting it, but not-so-much very-recent ones.

This is in large part because I might be stupid I'm a creachur of habit I'm making it into a new habit and during the transition period, if you don't see a post you know should go into the tag, but didn't yet, expect to see it in the coming days eventually, cuz the order I'm doing it in will look pretty chaotic in the initial interim.

4 notes


Remember, there’s more to backing up a Tumblr than the posts! Make sure to grab copies of your:

Drafts (Wordpress will include these in their Tumblr export, or you can post them and then use any method that backs up posts, or copy them manually into some notepad program)

Queue (I don’t *think* Wordpress includes these, but there’s still posting them or manual copying; I pretty much never use my queue, so I don’t have any experienced techniques for archiving it)

Inbox (I paste mine into a Word document: the formatting is a bit off, but it’s still fairly readable, and you can always clean it up)

Outbox (same method as inbox)

PM logs (I separate these into one Word document per person)

List of followed blogs, to help you consider which other blogs to archive† or to find elsewhere (there might be a cleverer way to do this, but I simply went through my list and wrote everything down in another Word document; I also included a note of how many there were in total, so I can tell at a glance if any vanish from Tumblr)

---

†You don’t need to own a blog to use the tumblr-utils backup method [link] on it.

#The Great Tumblr Apocalypse #The Last Tumblr Apocalypse #Tumblr: a User's Guide #101 Uses for Infrastructureless Computers #oh look an original post #(technically I use LibreOffice and not Word but I didn't want to distract from the point) #(the following category tag was added retroactively:) #Wordpress

25 notes


How popular is MadaSaku in context of other Madara’s and Sakura’s ships? Part 2

@madasakuweek

I am honestly shocked how many people liked my ship popularity analysis. Well, prepare for the second part - Fanfiction.net. It’s even longer!!! But imo more interesting.

Finding the popularity of ships on ff.net is both easier and more complicated, depending on how you approach the problem.

Let’s break up the task into two parts.

Approach 1

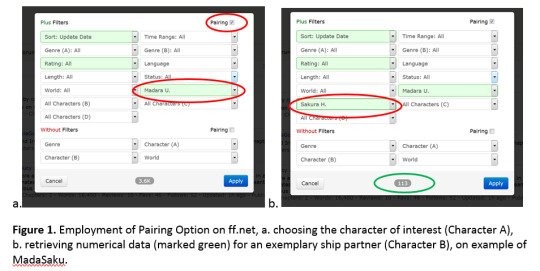

FF.net has a Pairing Option that was introduced in October 2013. This function makes the names of characters appear in square brackets, for example: [Madara U., Sakura H.], indicating that those characters form a romantic pair in a given fic.

When using Filters (Figure 1.a.) after choosing the character of interest, one can tick the Pairing Option, and then browse (using up and down arrows) through all the characters of the fandom. The number of fics tagged with Pairing Option between the character of interest (here: Madara) and every character B (here: Sakura) appear on the bottom of the panel (Figure 1.b.).

Data gathered by browsing through all of the Naruto characters with the Pairing Option on give unequivocal results. The only limitations of this method are the relatively late introduction of the Pairing Option (introduced in 2013, while Naruto started publishing in 1999), and the sometimes peculiar definitions of “Characters” on ff.net (example: Naruko as a separate character, “Team 7” as a “Character”).

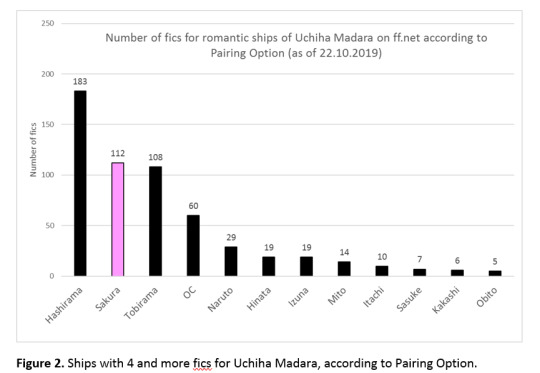

Results for Madara and Sakura are presented below (Figures 2. and 3.). For clarity, only ships with four or more fics are shown for Madara. In the case of Sakura, showing all the ships with >4 fics in a graph would blur the picture. Therefore, her Top 20 ships are presented in Figure 3., and the rest are summarized in Table 1. The analysis was performed on 22.10.2019.

However, ff.net was launched on 15.10.1998 (which precedes the publication of Naruto by one year), so there are 14 years’ worth of fic creation from when the Pairing Option wasn’t available. To get a complete overview of ship popularity it is necessary to include the body of work created in the earlier years of ff.net.

This issue was addressed through Approach 2.

Approach 2

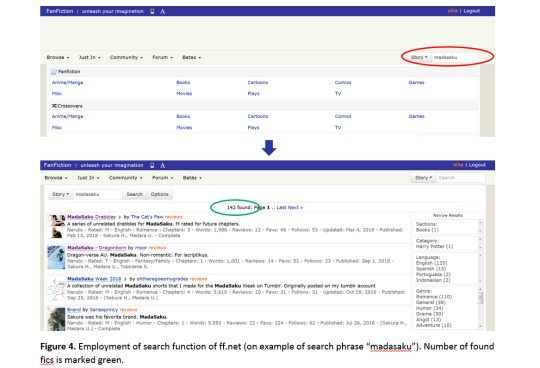

During the period of 1998-2013 fics were labelled with ship names in the summaries, and readers would look for ships of interest by typing the ship name into the search box. During those times certain conventional phrases were coined for the ships, and putting those in the summary allowed potential readers to find the story. Searching ff.net using selected phrases and collecting the corresponding fic numbers gives insight into that part of the fanworks. Use of the search engine is shown in Figure 4., using the phrase “madasaku” as an example.

I performed searches for common ships, basing the list on the ships with 4 or more fics as found using Pairing Option (see: section above).

I searched for the phrases that were, and still are, commonly used as ship names in Naruto fandom.

In general, there are three common ways to indicate a ship:

a) Merger of names, usually using first two syllables of both names, usually (but not always) with male name being first part of the phrase. Example: madasaku, and (less commonly used) sakumada. For the M/M and F/F couples, both orders are used, and it is impossible to predict which form is more common. Example: sakuhina and hinasaku would be used with similar frequency.

In case of names composed of two syllables or shorter, one syllable is often used.

b) Name_1xName_2 – complete names of characters separated by letter “x”, without spaces in between. There is no strict preference as to which name goes first more frequently, i.e. sakuraxmadara is equally plausible as madaraxsakura.

c) A combination of the above, i.e. shortened versions of names connected with an “x”, without spaces (example: madaxsaku)

Search engine of ff.net is case-insensitive, i.e. searching for “madasaku” will bring the same results as searching for “Madasaku” and “MadaSaku” (thank gods).

Other possible naming conventions, even if they are/were used, aren’t correctly recognized by the search engine of ff.net. If there are spaces between the words of the phrase (example: “Madara x Sakura”), the search returns all fics containing the words “Madara”, “x” and “Sakura” in the summary, but not necessarily together. Separating character names with a slash “/”, even without spaces (example: “Madara/Sakura”), returns a list of fics containing “Madara” and “Sakura”, not necessarily together (the slash symbol gets ignored).

Therefore, ship names written with spaces or slashes weren’t included in this analysis.

Other potential ship name variations (using “*”, using “&”, and surely a multitude of other variants that I couldn’t think of) were not included due to their relative infrequency (and limits to capacity and imagination).

For every ship included in the results of Approach 1 (total 43 searches for Sakura’s ships, and 12 searches for Madara’s ships), I searched for the following phrases (on example of MadaSaku ship):

madasaku

sakumada

madaraxsakura

sakuraxmadara

madaxsaku

sakuxmada
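The six phrases above follow a fixed pattern, so they can be generated rather than typed out for each ship. Below is a minimal sketch in Python; the function name `ship_search_phrases` and its parameters are hypothetical (they are not part of ff.net or of the original workflow), and the shortened names are passed in by hand on the assumption that mergers do not always follow the two-syllable rule.

```python
def ship_search_phrases(name_a, name_b, short_a, short_b):
    """Return the six lowercase search phrases for one ship.

    name_a / name_b  : full character names (e.g. "Madara", "Sakura")
    short_a / short_b: shortened forms used in name mergers (e.g. "Mada", "Saku")
    """
    a, b = name_a.lower(), name_b.lower()
    sa, sb = short_a.lower(), short_b.lower()
    return [
        sa + sb,         # merged short names, A first
        sb + sa,         # merged short names, B first
        a + "x" + b,     # full names joined by "x", A first
        b + "x" + a,     # full names joined by "x", B first
        sa + "x" + sb,   # short names joined by "x", A first
        sb + "x" + sa,   # short names joined by "x", B first
    ]


phrases = ship_search_phrases("Madara", "Sakura", "Mada", "Saku")
print(phrases)
```

Lowercasing is harmless here because the ff.net search is case-insensitive, as noted earlier.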

Certain ship names don’t follow the usual rules and/or required special treatment. For example, for the ship Gaara/Haruno Sakura the common name merger is GaaSaku (and not GaaraSaku). Tsunade/Haruno Sakura is equally often (or rarely, because the ship is very rare) abbreviated to TsuSaku and Tsunasaku. Fics tagged with the TobiSaku name merger may mean Senju Tobirama/Haruno Sakura as well as Tobi (Obito’s persona)/Haruno Sakura; in this case, fics were manually inspected and assigned to the correct categories. The ship with Naruko (which scored relatively high in the Pairing Option analysis) was not really possible to assess, because the merger name “narusaku” is identical to the merger for Uzumaki Naruto/Haruno Sakura (for good reasons).

All the fics for given pair were summed up (i.e. for Uchiha Madara/Haruno Sakura ship the fic numbers retrieved through searches for “madasaku”, “sakumada”, “madaraxsakura”, “sakuraxmadara”, “madaxsaku” and “sakuxmada” were added together).

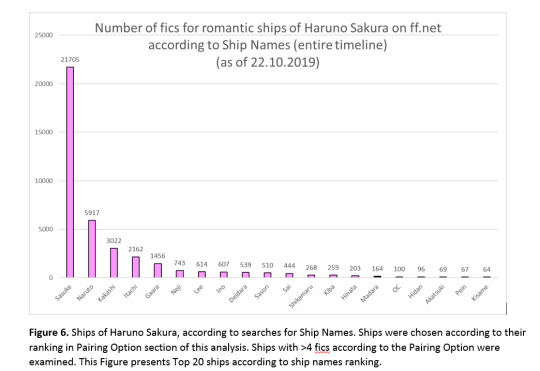

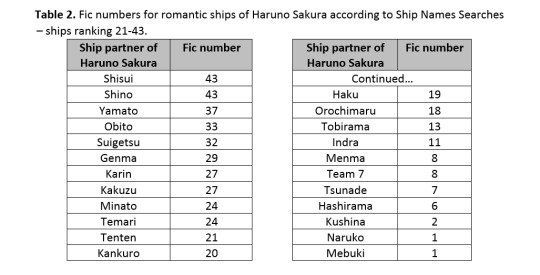

Results for Madara and Sakura are presented below (Figures 5. and 6.). In case of Sakura, including all the examined ships in a graph would blur the picture. Therefore, her Top 20 ships are presented in Figure 6., and the rest is summarized in the Table 2. The analysis was performed on 22.10.2019.

The list presented in Table 2 is not a comprehensive one. There are certainly more ships with <10 fics that were not included. One prominent, detected example is Chōji, who has 13 fics as a ship partner of Sakura, but was not included in Table 2 because according to the Pairing Option he had no fics with Sakura.

Limitations of the method

Employed method depends on performing six independent searches and summing up the numbers from those searches. A fic tagged with more than one of the searched phrases will be counted multiple times. For example: if a fic contained phrases “MadaSaku” and “MadaraxSakura” in its summary, it was counted twice. In extreme case, one fic would generate six data points: one real, and five false.

Nevertheless, since the same treatment was applied to all the pairings, the errors should be distributed in the same way across all the ships. Therefore, even if the numerical values obtained through this analysis don’t necessarily correspond to reality, the results should reflect respective relations between the ships (i.e. “ranking” should be correct). This assumption is true however only if all the subdivisions of the fandom follow the same customs and tagging etiquette. I.e. if creators writing for SasuSaku followed a habit of including only one Ship Name in their summaries, while creators of MadaSaku tended to include multiple Ship Names, then my method of analysis would overestimate the significance of MadaSaku ship. However, I am not aware of such trends existing in Naruto fandom.

Additionally, Ship Name tagging system allows for tagging infinite number of ships in one fic, therefore certain fics have been counted multiple times.
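The double counting described in this section can be illustrated with a toy example. This is only a sketch under stated assumptions: the fic IDs below are made up, and the ff.net search reports counts per phrase, not ID sets, so a deduplicated count like `unique` was not available in the actual analysis.

```python
# Hypothetical search results: phrase -> set of (made-up) fic IDs
# whose summary contains that phrase.
hits = {
    "madasaku": {101, 102, 103},
    "sakumada": {103},
    "madaraxsakura": {102, 104},
    "sakuraxmadara": set(),
    "madaxsaku": {101},
    "sakuxmada": set(),
}

# What the summing method reports: one data point per (fic, phrase) match.
summed = sum(len(ids) for ids in hits.values())

# What a deduplicated count would give if fic IDs were known.
unique = len(set().union(*hits.values()))

# Number of "false" data points introduced by summing.
overcount = summed - unique

print(summed, unique, overcount)
```

In this toy case, fics 101, 102 and 103 each match two phrases, so four real fics produce seven data points.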

Discussion

When considering fics tagged with Pairing Option, Sakura ranks as Madara’s second most popular ship partner, after Hashirama and before Tobirama. As on AO3, it is telling that a relatively “exotic” pairing with OC ranks 4th among Madara’s ships.

Madara is the 9th most popular ship partner of Sakura. Comparing and contrasting Sakura’s data between ff.net and AO3 would be an interesting analysis in its own right, but here I will only point out the switch of positions between Naruto and Kakashi as Sakura’s ship partners (Naruto is a strong 2nd on ff.net, while Kakashi takes this position on AO3); and relatively low, 7th place of Ino (strong 4th on AO3).

When taking into account data gathered through Ship Names Searches, Sakura ranks as Madara’s 2nd most popular ship partner (after Hashirama and before OC).

Madara, on the other hand, is Sakura’s 15th most popular ship partner. Since Ship Names Searches results are mostly derived from fics published in the earlier years of Naruto fandom, his lower place can be explained by his very late appearance in the franchise (on-panel in February 2008, almost 9 years after Naruto started publishing, and in person in the story in October 2011, 12 years after publication began).

Data gathered in this analysis present a unique opportunity for looking into the development of ship popularity over time.

Results from Pairing Option reflect mostly the state of fandom in years 2013-2019, while the results from Ship Names Search show the status from the earlier years. The exact division is however somewhat blurred, as some fics published before October 2013 have been retroactively tagged using Pairing Option (even if the majority of older fics remain untagged). The reverse is also true: many fics published after the introduction of Pairing Option, even as recently as the end of 2019, fail to use it. Additionally, many fics are tagged using both systems: the Pairing Option and Ship Name in summary.

Nevertheless one can tentatively regard Pairing Option results as “new”, or “current” ones, while the ones coming from the Ship Names Searches as “older” ones.

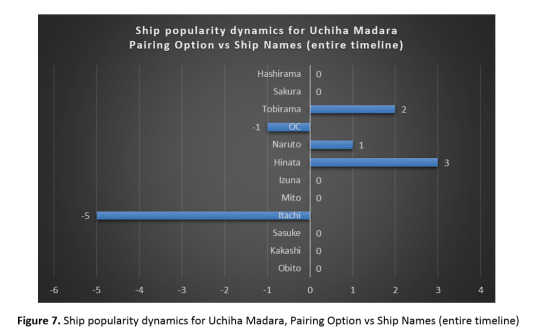

Figure 7. presents position changes in Top 12 Madara’s ships. Ranking from Pairing Option analysis was regarded as current and compared to the “older” ranking composed from all the fics tagged with Ship Names (regardless their publishing date). A positive value in the Figure 7. indicates a gain of popularity, for example: Tobirama gained 2 positions among Madara’s ships when comparing the “current” and “older” results.
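The position change plotted in Figure 7. is simply the "older" rank minus the "current" rank. Below is a minimal Python sketch of that calculation. The ranks for Hashirama, Sakura and OC are the ones stated in the Discussion; Tobirama's "older" rank of 5 is inferred from his current 3rd place and the stated gain of 2 positions, and the function name `position_change` is my own.

```python
def position_change(older_rank, current_rank):
    """Positive result = the ship moved up (gained popularity)."""
    return older_rank - current_rank


# "Older" ranking: Ship Names Searches. Tobirama's rank here is inferred.
older = {"Hashirama": 1, "Sakura": 2, "OC": 3, "Tobirama": 5}

# "Current" ranking: Pairing Option.
current = {"Hashirama": 1, "Sakura": 2, "Tobirama": 3, "OC": 4}

changes = {name: position_change(older[name], current[name]) for name in current}
print(changes)
```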

This analysis reveals that certain ships (with Tobirama, Naruto and Hinata) gained popularity, while ships with OC and Itachi lost popularity. The dramatic loss of popularity of the Itachi ship is very interesting, and one can speculate that it could have been caused by the Tobi/Obito reveal.

The same analysis was performed for Sakura’s ships and is presented in Figure 8.

Inspecting changes in Sakura ships’ rankings allows for several interesting observations. Minato, Shisui, Madara and Sasori are among greatest winners. It is symptomatic that all, except Sasori, are characters that appeared (or became relevant) later during the franchise. Among the characters losing in popularity as Sakura’s ship partners are members of Konoha Eleven (Lee, Kiba, Sai, Neji), an Akatsuki member (Deidara) and OC. It should also be noted that five top positions (Sasuke, Naruto, Kakashi, Itachi and Gaara) remained unchanged.

To further examine the “current” and “older” state of fandom, upon harvesting Ship Names data I sorted the fics according to publication date and took note of how many fics were published before and how many after 01.10.2013 (approximate date of introduction of Pairing Option tool).
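The before/after split can be sketched in a few lines of Python. The sample dates are invented for illustration, the function name `split_by_cutoff` is hypothetical, and counting a fic published exactly on 01.10.2013 as "after" is my assumption, since the text does not say how the boundary day was handled.

```python
from datetime import date

# Approximate introduction date of the Pairing Option tool.
PAIRING_OPTION_CUTOFF = date(2013, 10, 1)


def split_by_cutoff(publication_dates, cutoff=PAIRING_OPTION_CUTOFF):
    """Return (fics published before cutoff, fics published on or after it)."""
    before = sum(1 for d in publication_dates if d < cutoff)
    return before, len(publication_dates) - before


# Made-up publication dates for fics found through one Ship Names search.
sample = [date(2009, 5, 17), date(2012, 12, 1), date(2013, 10, 1), date(2016, 3, 8)]

before, after = split_by_cutoff(sample)
print(before, after)
```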

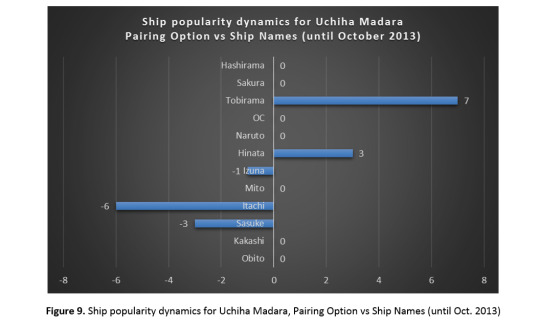

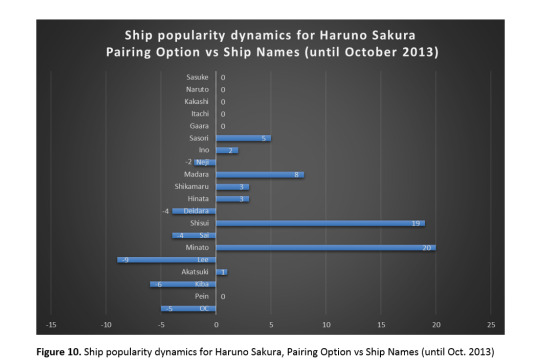

If one repeats the analysis from Figures 7. and 8. considering only fics published before 01.10.2013 as “older” ones, the following picture appears (Figures 9. and 10.):

Using this definition of “older” fics (i.e. fics tagged with Ship Names that were published until October 2013), the gain of popularity of the Tobirama ship becomes even more evident (gain of 7 positions). Ships with Itachi (as in the previous analysis) and Sasuke (undetected in the previous analysis) lost popularity.

Sakura ships’ dynamics according to this definition of “older” fics show even more pronounced position gains of Minato and Shisui, but otherwise similar trends to previous analysis.

Analysis of ship popularity on ff.net is a challenging one, but since this platform was, and still is, the main archive for Naruto fandom (407 thousand fics vs 49 thousand on AO3), it is important to address this issue even if the methodology is far from perfect.

I am open to all the comments, suggestions and hints, as the data collection was a major effort and I’m sure that this data can be used in many other different ways.

56 notes


Pornhub’s Content Purge Has Left Fetish Creators Wondering What’s Next

Before the purge that disappeared more than 75 percent of content on the platform, Pornhub hosted a lot of videos and photos that weren’t humans having sex. There were full-length movies, memes, and video game playthroughs that you might see on a non-adult site like Twitch, but there was also a ton of animation, 3D renderings, audio erotica, music videos, fanfic from furries and bronies, and stop-motion animation like LEGO minifigs fucking.

Pornhub became a dumping ground and safe harbor for a lot of stuff, and a lot of these creators didn't necessarily want to upload a photo of themselves to a huge porn corporation's database in order to get verified. They were just throwing things on the site for fun, to share with others in their respective communities, and the wider world. Compared to a site like Milovana (an adult message board and the birthplace of Cock Hero, videos of which are mostly gone from Pornhub now) or the furry fan art forum e621, Pornhub was a way to reach a more mainstream audience. With last week’s action, a lot of that stuff is now gone.

For victims of abusive imagery and non-consensual porn, as well as anyone who's had to deal with filing takedown requests for pirated content uploaded to Pornhub, the removal of unverified content is a positive: between Pornhub's new policy for only allowing content partners and performers in the model program to upload and download, and the retroactive suspension of all this content pending review, the platform seems to be making long-overdue changes that sex workers and victim advocates alike have asked for. But by applying a blanket solution to a complex problem, it's caught small, independent creators from niche communities in its net.

Several creators told me that Pornhub's damage-control scramble has created issues for verified users, locked many unverified creators out of their own content, and left many more wondering whether there's even a future for indie and fetish works on the site.

“It was a betrayal”

In a month when sex on the internet is being attacked from all sides—from Instagram's new terms of service, to TikTok kicking sex workers off the platform, to payment processors leaving Pornhub—some creators are concerned that losing one of the most popular porn sites in the world as a platform is another blow against fetish and outside-the-mainstream content on the internet as a whole.

For a lot of creators, Pornhub's melting pot was a source of inspiration for artists, Lifty and Sylox, hosts of the Furry Frequencies podcast, told me in an email. "Many of the videos that were uploaded onto Pornhub from the furry community were sexual videos of furries partaking in sexual acts in fursuit," they said—which could include videos of furries in fullsuit with “strategically-placed holes” performing solo or with one or more partners. "Some furries perform with just their fursuit head, handpaws, and feetpaws to provide better nude content. An unverified, but significant amount of this content catered to specific fetishes of the furry community, such as feet fetishism or watersports."

"Furries won't abandon PornHub immediately," Lifty and Sylox said, noting that more creators will likely migrate to Onlyfans or communities like Furaffinity to post content. "Changes like this tend to take time before the effects can be measured… PornHub's status as a repository for one-stop furry porn content will eventually diminish significantly."

It's not just illustrators and furries who have lost Pornhub as a platform in the last week. Audio erotica creator Goddess By Night told me that she lost all of her content—about 40 videos. She's been making audio erotica for five years, and in the last two she'd made a business out of it. She makes Gentle Female Dominant and Dominant Mommy-themed stories, as well as Futanari role play and other kink-related fantasies.

"Most of my work is a niche within the adult entertainment industry, and Pornhub allowed me to reach a broader audience, so it’s a pretty significant loss," she said. "However, my community has been incredibly supportive and intend to follow me to the next platform(s) I choose. I don’t plan on returning to Pornhub because of this. It was a betrayal, especially to the loads of creators they explicitly welcomed after Tumblr’s ban two years ago. I know some creators who lost work that they may never get back because Pornhub didn’t offer a grace period."

Each of the creators I talked to, whether they were verified or unverified, said that they weren't given any warning before Pornhub's content suspension took place. Pornhub used the word "suspension," not "deletion," and told Motherboard at the time of the suspension announcement that this meant content would be "removed pending verification and review."

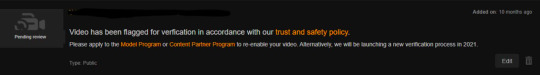

Creators whose content was removed saw a message in place of their uploads that explained the video was "flagged for verification" and invited them to apply for the Model Program or Content Partner Program in order to re-enable the videos, or wait for the new verification process to start in 2021.

They were locked out of their own content at that point and were unable to even download it from the platform.

When Tumblr removed all NSFW content from its platform in December 2018—similarly for allegations it hosted child sexual abuse imagery, but also to appease Apple—the social media platform gave creators about two weeks of notice to get their stuff off the site. Pornhub's announcement came at 7 a.m. EST on a Monday and went into effect immediately. By the time most people saw the news, the suspensions were underway, and more than 10 million uploads were gone by 9 a.m.

This is especially bitter news for creators who, in 2018, took Pornhub up on its invitation to move to the platform from Tumblr.

"Amusingly [Pornhub was] very happy to take advantage of the Tumblr refugees back when all that went down, inviting us to upload all our galleries there," adult content illustrator IzzyBSides told me in a Twitter message. "I think most of us on Twitter know we're living on borrowed time, people have backup accounts set up and occasionally plug them to their followers letting them know to follow it in case their main account randomly disappears overnight."

Because the mass suspensions were meant as a broad solution to get unlawful, abusive content on the site under control, allowing users to download their content would miss the point of stopping the spread of any abusive imagery. But the move also meant that a lot of content that wasn’t abusive and fell well within Pornhub's terms of service was removed in the process. People didn't have the chance to choose whether they wanted to pack their bags and go elsewhere. If they want to recover their own content, they'll have to play by Pornhub's verification rules, which have yet to be announced and won't start until sometime early next year.

One animator who asked to remain anonymous told me that they lost about 20 videos, "including story animations, which I loved very much …This was sad for me as the videos were deleted without warning," they said, but added that they luckily had a backup of their videos saved to their hard drives. "However, I didn't have any income from Pornhub, so it's okay, the videos are saved and I'll just move them to another site."

That animator wasn't verified, but even being a verified user didn't save some people from the purge. Pornhub's policy changes were intended to skip over verified accounts, but some users still saw their verified content taken down.

“Sex workers are under attack everywhere”

Another confusing aspect of Pornhub's cleaning spree is the effect it has had on verified creators and performers. Many have reported on Twitter that some of their verified content has been disappeared, seemingly at random, even while other uploads have stayed online. Others in the comments of Pornhub's own verification policy page say that they were verified, but now they aren't. From the outside, there seems to be no consistent reasoning for this.

Before the policy changes, there were three types of verification, according to Pornhub: Content partners, users in the model program, and verified users. The last category is now gone, and only uploads by models and content partners remain. The users reporting verification issues were likely in that last category—verified based on the old standards Pornhub used, which involved sending Pornhub a selfie with your face and holding up a sheet of paper with your name written on it. Those users are all now unverified. But inconsistencies remain.

Riley Cyriis, a performer who's been verified on Pornhub for more than a year, told me that most of her free videos and around 40 of 120 paid videos were removed, along with 20 videos she had set to private, only viewable by her.

"My best guess would be certain tags, like 'teen' or 'daddy,' but it's really just a guess," she said. "The majority of flagged videos were my most successful ones ranging from 200k to 700k views, so maybe it's just how they came up? My profile is pretty obviously made by a real person and I listed my age publicly."

She wasn't using Pornhub as a main income source (although many performers do), so she's planning to focus more on other platforms like Onlyfans and Manyvids.

Pornhub has said that it will restart the verification with new requirements in 2021. But the gap is a long time to wait if you're losing an audience and relied on the site for income. Many performers have already lost significant income due to Mastercard and Visa's decision to drop Pornhub. Performer Mary Moody said in a video about the payment processing news that she was making enough money from Modelhub to cover rent each month.

And the verification process, which still hasn't been clarified publicly by Pornhub, could bring up new issues for anyone who wants to get their content back. IzzyBSides said that they'd received verification rejections before Pornhub's content purge because their avatar, a fire sprite, obviously doesn't match their real-life face. In the most recent rejection, Pornhub's customer service email to them said, "We need to see your face to confirm." According to Pornhub, this method of verification is now outdated.

It's unclear how verification will work in the future, but Pornhub has said that identification of some kind will be part of the process. There are obviously many reasons that not everyone would want to use their real face as their avatar on a porn website.

"I'm not sure how I'm supposed to get verified with those sorts of requirements," IzzyBSides said, adding that performers who wear masks or keep their faces cropped out of videos would be excluded from verification on these terms. "It would force anyone experimenting out of the closet."

I asked performer Dylan Thomas how a verification system with even more strict requirements for identity could impact trans, non-binary, and gender non-conforming content creators and performers. He said Pornhub could avoid excluding these groups by consulting with, and hiring, them to help create the new system.

"Some of us with intersectional experience in both creative strategy, the digital space and sex work would like nothing more than to serve our community and get everyone back online, generating income and having an enjoyable, safe and sexy time," he said.

How Pornhub’s new verification policies and process will unfold in practice is yet to be seen, but by including the voices of people who use the site, it could avoid future mistakes—just as it could have avoided this month’s backlash—and listen to the sex workers and content creators who've been asking for things to change for a long time.

"Pornhub was blatant about their disregard to what appeared on the site. But sex workers are under attack everywhere," Cyriis said. "Aside from the payout issues caused by Visa/MC, YouTubers and celebrities are flicking onto our platforms and basically doing whatever they want with no real financial repercussions. The consequences fall squarely on the shoulders of sex workers who built these platforms."

Pornhub’s Content Purge Has Left Fetish Creators Wondering What’s Next syndicated from https://triviaqaweb.wordpress.com/feed/


Text

The Complete Guide to Facebook Privacy

Facebook has never been particularly good at prioritizing your privacy. Your data powers its business, after all. But recent revelations that a firm called Cambridge Analytica harvested the personal information of 50 million unwitting Facebook users in 2015 have created a new sense of urgency for those hoping for some modicum of control over their online life. If you ever needed a wake-up call, this is it.

The good news: Despite the repeated, public privacy lapses, Facebook does offer a fairly robust set of tools to control who knows what about you—both on the platform and around the web. The bad news: Facebook doesn't always make those settings easy to find, and they may not all offer the level of protection you want.

Fear not! Below, we'll walk you through the steps you need to take to keep advertisers, third-party apps, strangers, and Facebook itself at bay. And if after all that you still feel overly exposed? We'll show you how to walk away entirely.

Keep Apps in Check

Over the years you've used Facebook, you've probably given various apps permission to tap into its data trove. And why not? At the time it's a simple enough request, a way to share photos more easily, or find friends across the app diaspora.

In doing so, though, you're granting developers deep insight into your Facebook profile. And until Facebook tightened up permissions in 2015, you were also potentially letting them see information about your friends, as well; Cambridge Analytica scored all that data not from a hack, but because the developer of a legitimate quiz app passed it to them.

So! Time to audit which apps you've let creep on your Facebook account, and give the boot to any that don't have a very good reason for being there. That's most of them.

On a desktop (you can do this on mobile as well, but it's more streamlined on a computer), head to the downward-facing arrow in the upper-right corner of your screen, and click Settings. (You're going to spend a lot of time here today.) Now go to Apps, and gaze upon what your wanton permissions-granting hath wrought.

On this page, you can see a list of apps with access to your Facebook profile information.

OK, so maybe it's not that bad. Or maybe it is! I have friends who discovered well over a dozen apps lurking within the Logged in with Facebook pane; I only have four, but that's because I did some spring cleaning recently. Either way, you can see not only what apps are there, but how much info they're privy to. For instance: I haven't used IFTTT in years, but for some reason it has access to my Friend list, my timeline, my work history, and my birthday.

To revoke any of those permissions, go over and click the pencil. To scrap the app altogether, hit the X. You'll get a pop-up asking if you're sure. Yes, you're sure. Click Remove to make it official.

An important note here: Those developers still have whatever data about you that they've collected up to this point. You have to contact them directly to ask them to delete it, and they're under no obligation to do so. To at least make the attempt, find the app on Facebook and send them a message. If they ask for your User ID, you can find that back on the Apps page by clicking on the app in question and scrolling all the way down.

It feels like you should be done now, but you're not. From that same Apps page, go down just a smidge further to Apps, Websites, and Plugins. If you don't want Facebook bleeding into any other part of your online experience—that's games, user profiles, apps, you name it—then click Disable Platform. This could have unintended consequences, especially if you've used Facebook to login to other sites! Only one way to find out, though.

And then scroll down just one more teensy bit to Apps Others Use, where you'll see about a dozen bits of information about you, like your birthday, or if you're online, that your friends might unwittingly be sharing with apps and websites. Uncheck anything you don't want out there in the world, which is honestly probably all of it.

OK, now you're done. With apps. There's still a lot left, though.

Bad Ads

Back to the Settings panel! This time head to Ads, which you'll find right below Apps. (The fact that neither of these falls under Security or Privacy should tell you all you need to know about Facebook's disposition here.)

Just to be clear, Facebook—along with Google, and tons of faceless ad networks—tracks your every move online, even if you don't have an account. That's the internet we're stuck with for now, and no amount of settings tweaks can fix it. What you can do, though, is take a modicum of control over what Facebook does with that information.

That pair of shoes that haunts your News Feed, even though you already bought a similar pair? Exorcise them by turning off Ads based on my use of websites and apps.

"Online interest-based ads" are advertisements that rely on access to your browsing activity.

Also say no to Ads on apps and websites off the Facebook companies, which covers all the non-Facebook parts of the internet where the company serves up ads, which is pretty much everywhere. Then head straight down the line to Ads with your social actions, which you should only leave on in the event you want to share with the world that you accidentally clicked Like on that sponsored post from a furniture company that probably exists only on a server in Luxembourg.

And for some fun insight into what Facebook thinks you're into, click on Your Interests. There you'll find the categories that Facebook uses to tailor ads to your Liking. You can clear out any that bother you by clicking the X in the upper-righthand corner when you hover over, but mostly it's a fun lesson in how digital advertisers distill your essence. You'll also likely find at least one surprise; Facebook thinks I'm into IndyCar, which honestly, maybe, if I'd only give it a chance.

Please remember that none of this will in any way change the number of ads you see on Facebook or around the web. For that, you'll need an ad blocker.

Friends Focus

After a decade on Facebook, you've likely picked up friends along the way you no longer recognize: not just their profile picture, but their name and context. Who are all these people? Why are they Liking my baby pics? Why aren't they liking my baby pics?

To get a handle on who can see which of your posts, it's finally time to head to Settings then Privacy.

Start with Who can see my posts, then click on Who can see my future posts to manage your defaults. You've got options! You can go full-on public and share with the world, or limit your circle by geography, employers, schools, groups, you name it. Whatever you pick will be your default from here on out.

Whatever you pick, immediately go to Limit the audience for posts you've shared with friends of friends or public? to make that choice retroactive. In other words, if you had a public account until now, changing your settings won't automatically make your past posts private. You have to get in a few extra clicks for that.

Not everyone you know needs to see everything you do.

Skip ahead down to How People Find and Contact You, since that's thankfully pretty straightforward. Tweak all the settings to your liking. The main note here: Don't share your email or phone number unless you absolutely have to, and if you do, keep the circle as small as possible. (If you do have to share one or the other with Facebook for account purposes, you can hide them by going to your profile page, clicking Contact and Basic Info, then Edit when you mouse over the email field. From there, click on the downward arrow with two silhouettes to customize who can see it, including no one but you.)

And while we're almost done with this part, first we have to talk about tagging. If people want to tag you on Facebook, there's not much you can do about it. Sorry! But you can at least stop those embarrassing pics from showing up in your timeline. Enable the option to Review posts you're tagged in before the post appears on your timeline so you can clear anything out that you'd rather not see there.

Then, head to Timeline and Tagging in the left-hand menu. There you can limit who can post to your timeline, who can see which posts, who can see what you're tagged in, and so on. Your tolerance here will vary depending on how active a Facebook user you are and how obnoxious your friends can be, but at the very least it's helpful for setting custom audiences that exclude people—your boss, maybe, or an ex—you definitely don't want taking an active role in your Facebook experience.

To test out those changes, head to Review what other people see on your timeline, where you can see what your account looks like through the eyes of a set of people or a specific friend.

One last thing: You'll see a Face Recognition option in the left-hand menu pane as well. It has some genuine uses, like letting you know if someone is using a photo of you in their account for trolling or impersonation. But if you're fundamentally more creeped out by Facebook's algorithms hunting for your face than by potential human jerks, go ahead and switch it off.

What About Russians?

While it still sounds like the subplot to a lesser Die Hard installment, dozens of Russian propagandists really did infiltrate Facebook a few years ago. Did you follow or like one of their accounts? Find out for sure here, assuming Facebook doesn't once again upwardly revise the number. And then find a way to get that link in front of your aunt. You know which one I mean.

Is Facebook Listening To Everything I Say?

By this point, it's a trope: You have a casual conversation about umbrellas with your roommate—as one does—and a few hours later, umbrella ads flood your News Feed. Surely this means Facebook's using your smartphone's mic to eavesdrop, right?

Well, no, sorry! As we've explained here and others have investigated elsewhere, Facebook's not actually hijacking your microphone. For starters, it would be wildly impractical not only to sort through all that data, but to figure out which words meant anything.

Besides, worrying about Facebook eavesdropping distracts from the far more concerning fact that it doesn't have to. The things you and your friends do online, and where you do them, and when, and how, and from what locations, all form more than enough of a profile to inform ads that feel like Facebook isn't just listening in on your conversations, but on your private thoughts. So, please do feel better about the mic thing, but much, much worse about the state of internet tracking, targeting, and advertising at large.

Going Nuclear

If even scrolling through all of these settings tweaks has left you exhausted, much less actually implementing them, you do have a more efficient option: pulling the plug altogether.

Before you do this: First, do recognize that this won't solve all of your online ad woes. You'll still be tracked, targeted, and so on across the web, both by Facebook and other ad networks. They'll all have that much less info to work with, though! So that's something.

And second, if you do decide to go through with it, think about downloading your account first. There's no reason to lose all those photos and statuses and such. To preserve those memories offline, head to Settings > General Account Settings > Download a copy of your Facebook data and click Start my archive. Facebook will email you with a download link when it's ready, which you should pounce on since it'll expire eventually.

OK all set? Here we go. Head back to Settings again, where you'll start in General. Click on Manage Account, scroll past the grim "what happens to my social media presence when I die" bits, and click Deactivate my account. You'll need to enter your password here, look at photos of friends who will "miss" you, take a quick survey about why you're bailing, and then click Deactivate one more time.

There are several steps to deleting your account. Be sure to complete all of them.

Please note that you have not yet actually deleted your account! You've just put it in hibernation, in case you ever decide to come back. For full-on deletion—which means if you do decide to go back you'll have to start from scratch—head to this link right here. That'll put you just a password entry and a CAPTCHA away from freedom. There's a delay of a few days though, and if you sign back on in the interim, Facebook will go ahead and cancel that deletion request. So stick to your guns, don't log in, and maybe delete the Facebook app from your phone just in case.

And that's it! You're clear, at least until Facebook changes its privacy options once again. Whether you decide to stay or leave, the important thing is to take as much control over how your data gets used as possible. Sometimes that's still not a lot—but it's something.

That's What Friends Are For

A recap of the week a hurricane flattened Facebook

Cambridge Analytica's targeting efforts probably didn't work, but Facebook should be embarrassed anyway

If you want to be done with Facebook altogether, remember that it owns Instagram too



The Complete Guide to Facebook Privacy was originally posted by A 18 MOA Top News from around


Text

How to set up event tracking in Google Analytics

Event tracking is one of the most useful features in Google Analytics.

With just a little bit of extra code, you can capture all kinds of information about how people behave on your site.

Event tracking lets you monitor just about any action that doesn’t trigger a new page to load, such as watching a video or clicking on an outbound link. This data can be invaluable in improving your site.

There are two different ways you can set up event tracking in Google Analytics. One way is to add the code manually. The other is to set up tracking through Google Tag Manager.

Both methods are doable without a developer, although you may find it easier to use Google Tag Manager if you have no coding experience.

How to set up event tracking manually

What exactly is an event? Before you start tracking events, it’s important to understand how they’re put together. Each event is made up of four components that you define. These are category, action, label, and value.

Category

A category is an overall group of events. You can create more than one type of event to track in the same category “basket.”

For instance, you could create a category called Downloads to group a number of different events involving various downloads from your site.

Action

An event’s action describes the particular action that the event is set up to track. If you’re tracking downloads of a PDF file, for instance, you might call your event’s action Download PDF.

Label

Your label provides more information about the action taken. For instance, if you have several PDFs available for download on your site, you can keep track of how many people download each one by labeling each separate event with the PDF’s title.

A label is optional, but it’s almost always a good idea to use one.

Value

Value is an optional component that lets you track a numerical value associated with an event. Unlike the first three components, which are made up of text, value is always an integer.

For instance, if you wanted to keep track of a video’s load time, you would use the value component to do so. If you don’t need to keep track of anything numerical, it’s fine to leave this component out of your event.

A table of the four components of an event. Source: Google Analytics
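The four components can also be written down as a small data structure. The sketch below is only an illustration of the rules described above (text for category, action, and label; an integer for value; label and value optional). The helper name `buildEvent` and the label "Pricing Guide" are hypothetical and are not part of Google Analytics.

```javascript
// Hypothetical helper: checks the four event components described above.
// It is not part of any Google Analytics library.
function buildEvent({ category, action, label, value }) {
  if (typeof category !== 'string' || category === '') {
    throw new TypeError('category must be a non-empty string');
  }
  if (typeof action !== 'string' || action === '') {
    throw new TypeError('action must be a non-empty string');
  }
  if (label !== undefined && typeof label !== 'string') {
    throw new TypeError('label, when present, must be a string');
  }
  if (value !== undefined && !Number.isInteger(value)) {
    throw new TypeError('value, when present, must be an integer');
  }
  // Only include the optional components that were actually supplied.
  const event = { category, action };
  if (label !== undefined) event.label = label;
  if (value !== undefined) event.value = value;
  return event;
}

// Example: a PDF download event with an illustrative label.
const pdfDownload = buildEvent({
  category: 'Downloads',
  action: 'Download PDF',
  label: 'Pricing Guide',
});
```

Validating events in one place like this is a design choice, not a requirement. It simply catches a misspelled or non-numeric component before it reaches your reports.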

Step one: Decide how to structure your reports

Before you dive into tracking your events, come up with a plan for how you want your data to be organized. Decide which categories, actions, and labels you’ll use, and choose a clear and consistent naming pattern for them.

Remember, if you decide to change the structure of your event tracking later, your data won’t be reorganized retroactively. A little thought and planning up front can save you a lot of hassle down the road.
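One way to keep names consistent is to write the plan down in code before tracking anything. The following is a minimal sketch under that assumption. `EVENT_PLAN`, `plannedEvent`, and every category and action name in it are made-up examples, not names that Google Analytics requires.

```javascript
// Hypothetical naming plan: every name here is an example only.
const EVENT_PLAN = Object.freeze({
  pdfDownload: { category: 'Downloads', action: 'Download PDF' },
  videoPlay: { category: 'Videos', action: 'Play' },
  outboundClick: { category: 'Outbound Links', action: 'Click' },
});

// Look up a planned event and attach a per-item label (for example, a PDF title).
// Unknown names fail loudly so that typos do not create stray categories.
function plannedEvent(name, label) {
  if (!Object.prototype.hasOwnProperty.call(EVENT_PLAN, name)) {
    throw new Error(`Unknown event "${name}"; add it to EVENT_PLAN first`);
  }
  return { ...EVENT_PLAN[name], label };
}
```

Calling `plannedEvent('pdfDownload', 'Pricing Guide')` would return the planned category and action with that label attached, while a misspelled name throws instead of silently starting a new category.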

Step two: Connect your site to Google Analytics

If you haven’t done so already, set up a Google Analytics property and get your tracking ID. You can find your tracking ID by going to the admin section of your GA account and navigating to the property you want to track.

Once you have your ID, add the following snippet right after the <head> tag of each page:

<!-- Global Site Tag (gtag.js) - Google Analytics -->
<script async src="https://www.googletagmanager.com/gtag/js?id=GA_TRACKING_ID"></script>
<script>
  window.dataLayer = window.dataLayer || [];
  function gtag(){dataLayer.push(arguments);}
  gtag('js', new Date());

  gtag('config', 'GA_TRACKING_ID');
</script>

This code snippet enables Google Analytics to track events on your site. Replace GA_TRACKING_ID with your own tracking ID. Source: Analytics Help

Step three: Add code snippets to elements you want to track

Here is the format for an event tracking code snippet:

ga('send', 'event', [eventCategory], [eventAction], [eventLabel], [eventValue], [fieldsObject]);

After filling in the information that defines the event you want to track, add this snippet to the relevant element on your webpage. You’ll need to use something called an event handler to do so.

An event handler is an HTML attribute that triggers your tracking code to fire when a specific action is completed. For instance, if you wanted to track how many times visitors clicked on a button, you would use the onclick event handler and your code would look like this:

<button onclick="ga('send', 'event', [eventCategory], [eventAction], [eventLabel], [eventValue], [fieldsObject]);">Example Button Text</button>

You can find a list of common event handlers, as well as a more in-depth explanation on how they work, here.
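One caveat: the `ga('send', 'event', ...)` format above is the analytics.js command style, while the snippet in step two loads gtag.js, where events are normally sent with `gtag('event', ...)` instead. The sketch below shows the gtag.js form of the same event, assuming the step-two snippet is on the page. The helper names `toGtagArgs` and `trackEvent`, the element id `download-pdf`, and the label are hypothetical.

```javascript
// Convert the four components into the argument list that gtag.js expects:
// gtag('event', <action>, { event_category, event_label, value }).
function toGtagArgs({ category, action, label, value }) {
  const params = { event_category: category };
  if (label !== undefined) params.event_label = label;
  if (value !== undefined) params.value = value;
  return ['event', action, params];
}

// Send the event only if the gtag function from the step-two snippet exists.
// The arguments are returned either way, which makes the helper easy to inspect.
function trackEvent(event) {
  const args = toGtagArgs(event);
  if (typeof gtag === 'function') {
    gtag(...args);
  }
  return args;
}

// Browser-only wiring; "download-pdf" is a hypothetical element id.
if (typeof document !== 'undefined') {
  const button = document.getElementById('download-pdf');
  if (button) {
    button.addEventListener('click', () => {
      trackEvent({ category: 'Downloads', action: 'Download PDF', label: 'Pricing Guide' });
    });
  }
}
```

Attaching the listener in a script rather than in an inline onclick attribute is a matter of preference. Either approach fires the same call when the button is clicked.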

Step four: Verify that your code is working

Once you’ve added event tracking code to your page, the final step is to make sure it’s working. The simplest way to do this is to trigger the event yourself. Then, check Google Analytics to see if the event showed up.

You can view your tracked events by clicking “Behavior” in the sidebar and scrolling down to “Events.”

Your tracked events can be found under “Behavior” in Google Analytics.
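Before checking the reports, it can help to confirm locally that the tracking call ran at all. The sketch below assumes the gtag.js snippet from step two, where each `gtag()` call pushes its arguments onto `window.dataLayer`. The helper name `listGtagEvents` is hypothetical, and a match here only shows that the call was queued in the browser, not that Google Analytics recorded it.

```javascript
// Return the gtag 'event' commands recorded in a dataLayer-style array.
// Each gtag() call pushes its arguments object, so entries are array-like.
function listGtagEvents(dataLayer) {
  return Array.from(dataLayer)
    .filter((entry) => entry && entry[0] === 'event')
    .map((entry) => ({ action: entry[1], params: entry[2] || {} }));
}

// In a browser console, after triggering the event yourself:
//   listGtagEvents(window.dataLayer)
```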

How to set up event tracking with Google Tag Manager

Google Tag Manager can be a little tricky to navigate if you aren’t familiar with it. However, if you’ve never worked with code before, you might find tracking events with GTM easier than doing it manually.

If you have a large site or you want to track many different things, GTM can also help you scale your event tracking easily.

Step one: Enable built-in click variables

You’ll need GTM’s built-in click variables to create your tags and triggers, so start by making sure they are enabled. Select “Variables” in the sidebar and click the “Configure” button.

Enabling built-in click variables, step one

Then make sure all the click variables are checked.

Enabling built-in click variables, step two. Source

Step two: Create a new tag for the event you want to track

Click “Tags” on the sidebar. Then click the “New” button. You’ll have the option to select your tag type. Choose “Universal Analytics.”

Creating a new tag in Google Tag Manager

Step three: Configure your tag

Set your new tag’s track type to “Event.” Fill in all the relevant information – category, action, label, etc. – in the fields that appear underneath, and click “Continue.”

An example of how to configure a new tag in Google Tag Manager. Source: Analytics Help

Step four: Specify your trigger

Specify the trigger that will make your tag fire – for instance, a click. If you are creating a new trigger (as opposed to using one you’ve created in the past), you will need to configure it.

Types of triggers that you can choose in Google Tag Manager

An example of how to configure a trigger. This one fires when a certain URL is clicked. Source: Johannes Mehlem

Step five: Save the finished tag

After you save your trigger, it should show up in your tag. Click “Save Tag” to complete the process.

A tag that is ready to go. Source: Analytics Help

The takeaway and extra resources

Event tracking is one of the most useful and versatile analytics techniques available – you can use it to monitor nearly anything you want. While this guide will get you started, there’s a lot more to know about event tracking with Google Analytics, so don’t be afraid to look for resources that will help you understand event tracking.

Courses like the 2018 Google Analytics Bootcamp on Udemy (which I used to help write this article) will give you a solid grounding in how to use Google Analytics and Google Tag Manager, so you’ll be able to proceed with confidence.

How to set up event tracking in Google Analytics syndicated from https://hotspread.wordpress.com


Text

How to set up event tracking in Google Analytics

Event tracking is one of the most useful features in Google Analytics.

With just a little bit of extra code, you can capture all kinds of information about how people behave on your site.

Event tracking lets you monitor just about any action that doesn’t trigger a new page to load, such as watching a video or clicking on an outbound link. This data can be invaluable in improving your site.

There are two different ways you can set up event tracking in Google Analytics. One way is to add the code manually. The other is to set up tracking through Google Tag Manager.

Both methods are doable without a developer, although you may find it easier to use Google Tag Manager if you have no coding experience.

How to set up event tracking manually

What exactly is an event? Before you start tracking events, it’s important to understand how they’re put together. Each event is made up of four components that you define. These are category, action, label, and value.

Category

A category is an overall group of events. You can create more than one type of event to track in the same category “basket.”

For instance, you could create a category called Downloads to group a number of different events involving various downloads from your site.

Action

An event’s action describes the particular action that the event is set up to track. If you’re tracking downloads of a PDF file, for instance, you might call your event’s action Download PDF.

Label

Your label provides more information about the action taken. For instance, if you have several PDFs available for download on your site, you can keep track of how many people download each one by labeling each separate event with the PDF’s title.

A label is optional, but it’s almost always a good idea to use one.

Value

Value is an optional component that lets you track a numerical value associated with an event. Unlike the first three components, which are made up of text, value is always an integer.

For instance, if you wanted to keep track of a video’s load time, you would use the value component to do so. If you don’t need to keep track of anything numerical, it’s fine to leave this component out of your event.

A table of the four components of an event. Source: Google Analytics
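The four components map naturally onto a small data structure. What follows is a minimal sketch rather than anything provided by Google Analytics: buildEvent is a hypothetical helper, and the PDF title is made up. It only enforces the rules described above (category and action present, label optional, value an integer when supplied).

```javascript
// Hypothetical helper (not part of Google Analytics): assembles the four
// event components and checks the rules described in this section.
function buildEvent({ category, action, label, value }) {
  if (!category || !action) {
    throw new Error('category and action are required');
  }
  const event = { category: String(category), action: String(action) };
  // Label is optional text.
  if (label !== undefined) {
    event.label = String(label);
  }
  // Value is optional, but must be an integer when present.
  if (value !== undefined) {
    if (!Number.isInteger(value)) {
      throw new Error('value must be an integer');
    }
    event.value = value;
  }
  return event;
}

const pdfEvent = buildEvent({
  category: 'Downloads',
  action: 'Download PDF',
  label: 'Pricing Guide', // hypothetical PDF title
});
console.log(pdfEvent);
```

Validating in one place like this is a design choice, not a requirement; it simply catches a missing category or a fractional value before the event is sent.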

Step one: Decide how to structure your reports

Before you dive into tracking your events, come up with a plan for how you want your data to be organized. Decide which categories, actions, and labels you’ll use, and choose a clear and consistent naming pattern for them.

Remember, if you decide to change the structure of your event tracking later, your data won’t be reorganized retroactively. A little thought and planning up front can save you a lot of hassle down the road.

Step two: Connect your site to Google Analytics

If you haven’t done so already, set up a Google Analytics property and get your tracking ID. You can find your tracking ID by going to the admin section of your GA account and navigating to the property you want to track.

Once you have your ID, add the following snippet right after the <head> tag of each page:

<!-- Global Site Tag (gtag.js) - Google Analytics -->
<script async src="https://www.googletagmanager.com/gtag/js?id=GA_TRACKING_ID"></script>
<script>
  window.dataLayer = window.dataLayer || [];
  function gtag(){dataLayer.push(arguments);}
  gtag('js', new Date());

  gtag('config', 'GA_TRACKING_ID');
</script>

This code snippet enables Google Analytics to track events on your site. Replace GA_TRACKING_ID with your own tracking ID. Source: Analytics Help

Step three: Add code snippets to elements you want to track

Here is the format for an event tracking code snippet when your site uses the gtag.js snippet from step two:

gtag('event', [eventAction], {'event_category': [eventCategory], 'event_label': [eventLabel], 'value': [eventValue]});

If your site loads the older analytics.js library instead, the equivalent call is ga('send', 'event', [eventCategory], [eventAction], [eventLabel], [eventValue], [fieldsObject]);

After filling in the information that defines the event you want to track, add this snippet to the relevant element on your webpage. You’ll need to use something called an event handler to do so.

An event handler is an HTML attribute that runs your tracking code when a specific action occurs. For instance, if you wanted to track how many times visitors clicked on a button, you would use the onclick event handler and your code would look like this:

<button onclick="gtag('event', [eventAction], {'event_category': [eventCategory], 'event_label': [eventLabel], 'value': [eventValue]});">Example Button Text</button>

You can find a list of common event handlers, as well as a more in-depth explanation on how they work, here.
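As an alternative to an inline onclick attribute, the handler can be attached from a script. The sketch below assumes the page loads the gtag.js snippet from step two, where events are sent as gtag('event', action, params). trackClicks is a hypothetical helper, and the element id and event names in the usage comment are invented for illustration.

```javascript
// Sketch: attach tracking with addEventListener instead of an inline onclick.
// `trackClicks` is a hypothetical helper. `send` is whatever function reports
// the event; on a real page that would be the global gtag function.
function trackClicks(element, send, { category, action, label }) {
  element.addEventListener('click', () => {
    send('event', action, {
      event_category: category,
      event_label: label,
    });
  });
}

// In a browser, usage might look like this (the element id is hypothetical):
// trackClicks(document.getElementById('pdf-button'), gtag, {
//   category: 'Downloads',
//   action: 'Download PDF',
//   label: 'Pricing Guide',
// });
```

Passing the reporting function in as an argument keeps the markup free of tracking code and lets you try the helper with a stand-in function before wiring it to Google Analytics.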

Step four: Verify that your code is working

Once you’ve added event tracking code to your page, the final step is to make sure it’s working. The simplest way to do this is to trigger the event yourself. Then, check Google Analytics to see if the event showed up.

You can view your tracked events by clicking “Behavior” in the sidebar and scrolling down to “Events.”

Your tracked events can be found under “Behavior” in Google Analytics.
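Before checking the reports, a quick local check can confirm that the click reached the page’s dataLayer at all. This is a rough sketch assuming the gtag.js snippet from step two, in which gtag() pushes its arguments object onto dataLayer. findGtagEvents is a hypothetical helper, and the dataLayer and gtag definitions below are stand-ins so the example runs outside a browser. It does not prove that Google Analytics received the hit, only that the call was made.

```javascript
// Sketch: list the events that gtag() has queued in a dataLayer array.
// gtag() pushes its `arguments` object, so each entry is array-like.
function findGtagEvents(dataLayer, action) {
  return dataLayer
    .map((entry) => Array.from(entry))
    .filter((args) => args[0] === 'event' && (!action || args[1] === action));
}

// Stand-ins for the page's dataLayer and gtag function from step two.
const dataLayer = [];
function gtag() { dataLayer.push(arguments); }

gtag('js', new Date());
gtag('event', 'Download PDF', { event_category: 'Downloads' });

console.log(findGtagEvents(dataLayer, 'Download PDF'));
```

On a live page you would pass window.dataLayer to the helper from the browser console after triggering the event yourself.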

How to set up event tracking with Google Tag Manager

Google Tag Manager can be a little tricky to navigate if you aren’t familiar with it. However, if you’ve never worked with code before, you might find tracking events with GTM easier than doing it manually.

If you have a large site or you want to track many different things, GTM can also help you scale your event tracking easily.

Step one: Enable built-in click variables

You’ll need GTM’s built-in click variables to create your tags and triggers, so start by making sure they are enabled. Select “Variables” in the sidebar and click the “Configure” button.

Enabling built-in click variables, step one

Then make sure all the click variables are checked.

Enabling built-in click variables, step two. Source

Step two: Create a new tag for the event you want to track

Click “Tags” on the sidebar. Then click the “New” button. You’ll have the option to select your tag type. Choose “Universal Analytics.”

Creating a new tag in Google Tag Manager

Step three: Configure your tag

Set your new tag’s track type to “Event.” Fill in all the relevant information – category, action, label, etc. – in the fields that appear underneath, and click “Continue.”

An example of how to configure a new tag in Google Tag Manager. Source: Analytics Help

Step four: Specify your trigger

Specify the trigger that will make your tag fire – for instance, a click. If you are creating a new trigger (as opposed to using one you’ve created in the past), you will need to configure it.

Types of triggers that you can choose in Google Tag Manager

An example of how to configure a trigger. This one fires when a certain URL is clicked. Source: Johannes Mehlem

Step five: Save the finished tag

After you save your trigger, it should show up in your tag. Click “Save Tag” to complete the process.

A tag that is ready to go. Source: Analytics Help

The takeaway and extra resources

Event tracking is one of the most useful and versatile analytics techniques available – you can use it to monitor nearly anything you want. While this guide will get you started, there’s a lot more to know about event tracking with Google Analytics, so don’t be afraid to look for resources that will help you understand event tracking.

Courses like the 2018 Google Analytics Bootcamp on Udemy (which I used to help write this article) will give you a solid grounding in how to use Google Analytics and Google Tag Manager, so you’ll be able to proceed with confidence.

source https://searchenginewatch.com/2018/03/19/how-to-set-up-event-tracking-in-google-analytics/ from Rising Phoenix SEO http://risingphoenixseo.blogspot.com/2018/03/how-to-set-up-event-tracking-in-google.html

0 notes

Text

Query Time Data Import in Google Analytics For Historical Reporting

One of the most important things that you can do to enhance your reporting capabilities in Google Analytics is to add custom information to your reports, and query time Data Import is a powerful tool to help you accomplish this. Google Analytics by itself generates a massive amount of information about your website, the users that visit, how they got there, and what content they viewed. However, there’s only so much that Google Analytics can reliably determine with the simple out-of-the-box implementation. Luckily, there are places where we can store custom information, and a few different methods for getting the data we want into Google Analytics.

What is Data Import?

The Data Import feature in Google Analytics allows you to upload data from other sources and combine it with your Google Analytics data. It’s just one way to get custom information into GA. Other options can include sending information with the hit on the page, or using Measurement Protocol hits, but those have their own technical challenges, pros, and cons.

When you have a lot of information you want to get into GA, Data Import is the way to go. We’ve written about the Data Import feature in the past: how to do it and how to report on the data you’ve imported.

There are several types of data you can upload, and the uploading process can be done manually in the GA interface or using the API.

Data Import Behavior

You can choose from two different modes of Data Import: processing time and query time. Processing time mode is available for standard and 360 users, while query time mode is available for Google Analytics 360 only.

Processing time data import works by joining data going forward. As hits come in from your website, GA will check them against your uploaded data, connect any extra data that it finds, and then save this data in your report. Uploaded data is permanently married to your GA data, and it will only be added to hits that are collected after the data import was created. If the data is uploaded incorrectly, you cannot fix it or delete it.

Query time data import works differently. This method refreshes the joined data every time that you request a report (a “query”), dynamically connecting your GA data with your uploaded data. This means that you’ll see whatever data was most recently uploaded, even if you select a report and date range from before you uploaded the data. This is AMAZING.
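The difference between the two modes is easier to see in a toy model. The sketch below is not how Google Analytics is implemented; the data shapes (hits with a time and a sku, uploads with a time and a lookup table) are assumptions made purely for illustration, and uploads are assumed to be sorted oldest first.

```javascript
// Toy model of the two join modes; not Google's implementation.
// A hit is { time, sku }. An upload is { time, rows }, where rows maps
// a sku to its extra details. Uploads are assumed sorted oldest first.

// Processing time: each hit is joined once, when it arrives, against the
// most recent upload that existed at that moment. Earlier hits stay unjoined.
function processingTimeJoin(hits, uploads) {
  return hits.map((hit) => {
    const available = uploads.filter((u) => u.time <= hit.time);
    const latest = available[available.length - 1];
    const details = latest ? latest.rows[hit.sku] : undefined;
    return { ...hit, details: details ?? null };
  });
}

// Query time: every hit in the report is joined against the newest upload,
// regardless of when the hit was collected.
function queryTimeJoin(hits, uploads) {
  const latest = uploads[uploads.length - 1];
  return hits.map((hit) => ({
    ...hit,
    details: (latest && latest.rows[hit.sku]) ?? null,
  }));
}

const hits = [
  { time: 1, sku: 'A1' },
  { time: 5, sku: 'A1' },
];
const uploads = [{ time: 3, rows: { A1: { color: 'red' } } }];

console.log(processingTimeJoin(hits, uploads)); // only the hit at time 5 is joined
console.log(queryTimeJoin(hits, uploads));      // both hits are joined
```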

What are the Benefits of Query Time Data Import?

For 360 users (or anyone considering 360) query time import has some advantages over processing time mode:

You can join data retroactively, which allows you to use almost all of the features and reports in the GA interface for historical analysis.

You can change, fix or delete the uploaded data; it’s only joined with GA data when requested by a report.

Another benefit to using query time import is that you can use the imported data in Data Studio to visualize and measure historic performance. Although Data Studio doesn’t support data joining yet, you can use query time import to join the data within Google Analytics, then visualize in Data Studio.

Data Import Examples

This type of data import works really well when you want to show the current value of something in Google Analytics, and you’re not concerned with keeping historic records in Google Analytics.

Ecommerce

For sites with a huge inventory of products, you could use query time import to upload additional product-specific details. Since you’ll be able to retroactively join these details with products, this is a great option for non-changing values, like color, size, flavor, ISBN, weight, etc., or any other values that would be consistent for a product over time. You probably would not want to use query time import for changing values like price, since a product price can change over time due to discounts or inflation.

Publishers

If you have a large publisher site where content performance metrics are a high priority, you can bring in additional page-level details for analysis, such as category, author, tags, publish date, etc. While you could use processing time mode to upload additional content details later in the day (for example, at midnight, or even automatically every hour), you’d only be able to measure engagement with that article going forward – either the hour after the article is published or the day after.

However, since publishers often see the highest engagement within minutes of publishing a new article or post, you’d potentially be missing data for the peak engagement times with new content. Because query time mode links data historically, it might be a better option to measure total engagement with content from the time that it was published.

Sales

Query time data import can be extremely valuable to sales teams, as well. If you have a CRM like Salesforce, you can upload additional data into Google Analytics to better understand the process that users took to become a qualified lead or sales prospect.

For example, you could upload details like company name, salesperson, industry, etc. into Google Analytics, using the Client ID as the key. You’d need to send this value to both places: saved in Google Analytics as a custom dimension and stored in Salesforce, as Dan documents in this post.

Because we’re using query time, we know that data will be joined historically; we can see everything that each company (or lead) did on our website in the past – all of the pages they viewed, events they triggered, and conversions. You’ll need to take efforts to make sure you’re not passing in Personally Identifiable Information – we don’t want to upload names, email addresses, phone numbers, etc.
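Preparing the upload file for this sales example could look roughly like the sketch below. It assumes the import file is a CSV whose headers name custom dimensions in the ga:dimensionN style. The dimension indexes, record field names, and sample values are all hypothetical, so check them against the schema that Google Analytics shows for your own data set. Only listed columns are written, which is one simple way to keep fields such as email addresses out of the file.

```javascript
// Sketch: turn CRM records into CSV text for Data Import, keyed on Client ID.
// Field names and custom dimension indexes are hypothetical.
const COLUMNS = [
  { header: 'ga:dimension1', field: 'clientId' }, // key: Client ID
  { header: 'ga:dimension2', field: 'company' },
  { header: 'ga:dimension3', field: 'salesperson' },
  { header: 'ga:dimension4', field: 'industry' },
];

// Quote a cell if it contains a comma, a double quote, or a newline.
function csvCell(value) {
  const text = String(value ?? '');
  return /[",\n]/.test(text) ? '"' + text.replace(/"/g, '""') + '"' : text;
}

// Only the columns listed above are written, so other fields on a record
// (email, phone, and so on) never reach the upload file.
function toImportCsv(records) {
  const header = COLUMNS.map((c) => c.header).join(',');
  const rows = records.map((r) =>
    COLUMNS.map((c) => csvCell(r[c.field])).join(',')
  );
  return [header, ...rows].join('\n');
}

const csv = toImportCsv([
  {
    clientId: '1234.5678',
    company: 'Acme, Inc.',
    salesperson: 'Rep-07',
    industry: 'Retail',
    email: 'lead@example.com', // present on the record, dropped from the file
  },
]);
console.log(csv);
```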

By filtering the lead data by salesperson, you can create a Custom Report that gets automatically emailed to each individual on the sales team. This can be a great reminder for your sales team to follow up with leads who come back to your site after their original visit, especially if you have a longer sales cycle.

SEO and Social

For SEO or social metrics such as shares, likes, or number of links, you could import data using the page URL as the key. To get this social or SEO data, you’d need to harness the power of a tool that could fetch page-level information from social or SEO platforms.

To give you an example, we’ve connected Supermetrics and its Google Drive add-on option to report on content engagement from social sources like LinkedIn. Because we want to automate uploading this social data into Google Analytics, we’re also using a Google Apps script for the data import process (ask a developer to help with this part).

In this example, we can schedule Supermetrics to refresh the total number of LinkedIn shares for each blog post in our Google Sheet; then, using Data Import, we upload that data to Google Analytics every night. Similar to the use case for sales teams, we can schedule automated Custom Reports for each author so they can get the most up-to-date insight into how their content is performing across social media.

Social shares are the perfect example of a field in Google Analytics where we’d prefer to see the most recent data, that is, the current number of shares. Note, however, that we’re uploading these as Custom Dimensions, which means that they won’t be treated as numbers in GA. Instead of uploading the raw numbers, which may change by just a few shares each day, we bucketed our results to get a general idea of which blog posts are getting shared most.
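Bucketing of this kind can be sketched in a few lines. The thresholds and labels below are hypothetical, not the ones used for the report described here; the point is only that a raw count becomes a coarse text value that is suitable for a custom dimension and that changes only when a post crosses a threshold.

```javascript
// Sketch: convert a raw share count into a coarse text bucket.
// The thresholds and labels are hypothetical.
const BUCKETS = [
  { max: 0, label: '0' },
  { max: 10, label: '1-10' },
  { max: 50, label: '11-50' },
  { max: 100, label: '51-100' },
];

function bucketShares(count) {
  // Pick the first bucket whose upper bound covers the count.
  const bucket = BUCKETS.find((b) => count <= b.max);
  return bucket ? bucket.label : '101+';
}

console.log(bucketShares(7));   // falls in the 1-10 bucket
console.log(bucketShares(250)); // falls in the open-ended top bucket
```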

Ready to Use Data Import?

There are a few limitations to know about – query time is currently in Beta, and cannot be used with: Cohort reporting, Multi-Channel Funnels, Realtime reporting, or AdWords cost data.